Deep learning efficiency research

Post-training optimization, calibration, and reparameterization

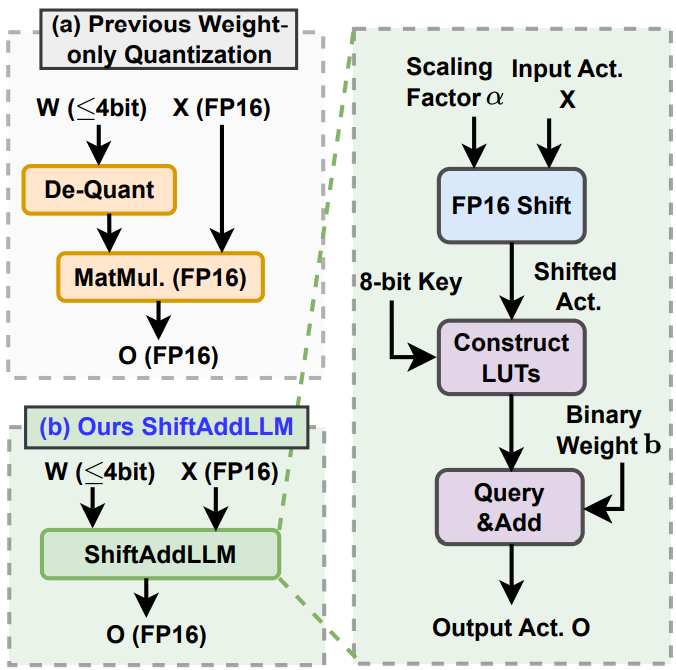

This research thrust focuses on democratizing AI through optimization, calibration, and reparameterization of pre-trained large foundation models, enabling their efficient deployment on extremely resource-limited hardware. Research outcomes of this project include ShiftAddLLM, GEAR, and dynamic network rewiring.

We explore several avenues for obtaining efficient models, including model reparameterization, quantization, and sparse learning.

Notable public highlights include the GEAR highlight and the ShiftAddLLM highlight by AK on Hugging Face.