LLM personalization and long-context learning

Efficient fine-tuning and long-context understanding

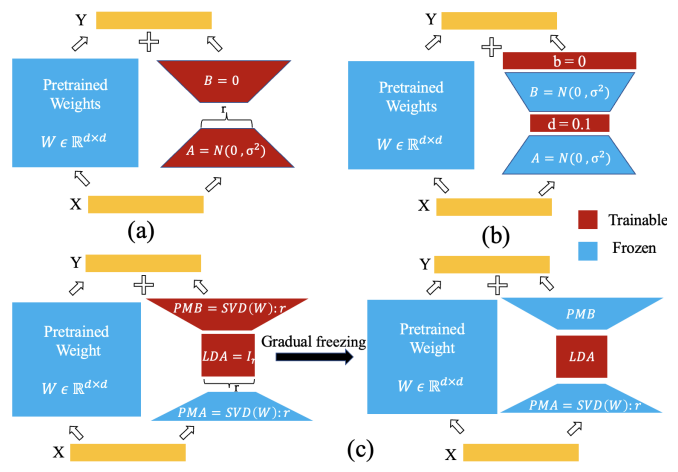

This research thrust focuses on enabling the personalization of foundation models through memory-efficient fine-tuning. Outcomes of this project include LaMDA, AFLoRA, and efficient long-context understanding without forgetting information in the middle of the context.

We explore several avenues for memory-efficient fine-tuning. We also study how to deploy LLMs efficiently on long-context understanding tasks.
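Low-rank adaptation, the family that AFLoRA builds on, saves memory mainly because it trains far fewer parameters than full fine-tuning, so the gradients and optimizer state shrink with it. The sketch below only shows that generic arithmetic and is not the LaMDA or AFLoRA method. It assumes a single hypothetical square 4096 x 4096 projection, a hypothetical adapter rank of 8, and an Adam-style optimizer that keeps two fp32 state values per trainable parameter.

```python
# Minimal sketch of generic LoRA-style parameter and optimizer-state arithmetic.
# Layer size, rank, and the Adam fp32-state assumption are illustrative only.

def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a rank-r update B @ A, with B: d_out x r and A: r x d_in."""
    return rank * (d_in + d_out)


def adam_state_bytes(trainable: int, bytes_per_value: int = 4) -> int:
    """Optimizer-state memory, assuming two fp32 moments (m and v) per trainable parameter."""
    return trainable * 2 * bytes_per_value


d = 4096  # hypothetical hidden size of one square projection layer
r = 8     # hypothetical adapter rank

full = full_finetune_params(d, d)
lora = lora_params(d, d, r)

print(f"full fine-tuning: {full} params, {adam_state_bytes(full) / 2**20:.1f} MiB Adam state")
print(f"rank-{r} adapter: {lora} params, {adam_state_bytes(lora) / 2**20:.1f} MiB Adam state")
print(f"adapter / full: {lora / full:.2%}")
```

With these assumed numbers, the adapter trains about 0.39% of the layer's parameters, and its optimizer state drops from 128 MiB to 0.5 MiB. The frozen base weights still have to be held in memory, so the total savings depend on the model and the training setup.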